TensorFlow library for adding FPGA based layers


Components

- hostLib/ Python wrapper module
  - layers/ Layer definitions
- c++/ TensorFlow custom operator library
  - lib/mlfpga/ FPGA data transfer library
- /bachelor/vhdl-modules VHDL implementation

Usage

    import tensorflow as tf
    from tensorflow.keras import models

    from hostLib.layers.conv2d import Conv2D as Conv2DFPGA

    model = models.Sequential()
    model.add(Conv2DFPGA(1))

Installation

clone repository and init submodules

git clone <this url> cd ./tf-fpga git submodule initinstall dependencies (on Ubuntu Linux for example)

sudo apt update sudo apt upgrade -y sudo apt autoremove sudo apt install python3 python3-pip sudo python3 -m pip install --upgrade pip # update pip globally python3 -m pip install tensorflowinstall C++ compiler

sudo apt install g++compile operator and fpga libraries

cd ./c++ ./configure make > /usr/bin/g++ ... -o build/dummyBigOp.o src/dummyBigOp.cpp > ... > /usr/bin/g++ ... -o build/op_lib.so ...update

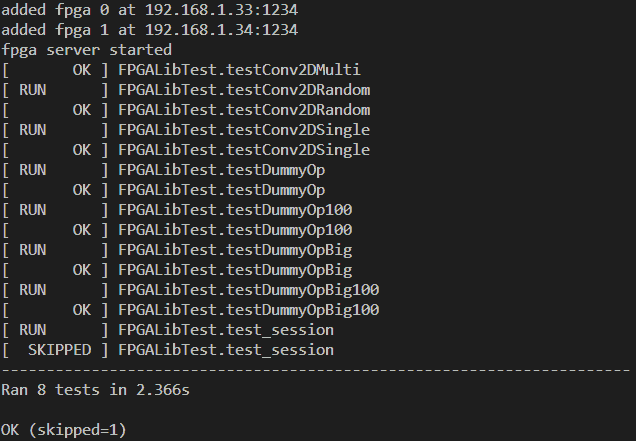

config.jsonwith your FPGA addresses defined in the VHDL design{"fpgas": [ { "ip": "192.168.1.33", "port": 1234 }, { "ip": "192.168.1.34", "port": 1234 }, { "ip": "192.168.1.35", "port": 1234 } ]}
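Because a typo in config.json only surfaces once the operator library tries to reach an FPGA, it can help to check the file beforehand. The following is a minimal sketch using only the Python standard library; `parse_fpga_config` is a hypothetical helper, not part of this repository, and it assumes the file contains nothing beyond the `fpgas` list with `ip` and `port` entries shown above.

```python
import json

# Same structure as the config.json above; the addresses are the README's examples.
CONFIG_TEXT = """
{"fpgas": [
  { "ip": "192.168.1.33", "port": 1234 },
  { "ip": "192.168.1.34", "port": 1234 },
  { "ip": "192.168.1.35", "port": 1234 }
]}
"""


def parse_fpga_config(text):
    """Return a list of (ip, port) tuples; raise ValueError on malformed input."""
    data = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("config must be a JSON object")
    fpgas = data.get("fpgas")
    if not isinstance(fpgas, list) or not fpgas:
        raise ValueError('config needs a non-empty "fpgas" list')
    endpoints = []
    for i, entry in enumerate(fpgas):
        if not isinstance(entry, dict):
            raise ValueError(f"fpgas[{i}]: expected an object")
        ip = entry.get("ip")
        port = entry.get("port")
        if not isinstance(ip, str) or not ip:
            raise ValueError(f"fpgas[{i}]: missing or invalid ip")
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(port, bool) or not isinstance(port, int) or not 0 < port < 65536:
            raise ValueError(f"fpgas[{i}]: missing or invalid port")
        endpoints.append((ip, port))
    return endpoints


endpoints = parse_fpga_config(CONFIG_TEXT)
print(endpoints)
```

To check a real file, read it with `open("config.json").read()` and pass the text to the same function.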

Adding new custom layers

For more details on how to contribute to git projects see https://gist.github.com/MarcDiethelm/7303312.

- create a computation module in the FPGA implementation

- add your FPGA module to the list of modules in c++/lib/mlfpga/include/modules.hpp

  then the MOD_DEF macro creates these entries automagically:

      moduleIds[Module::myNewModule];
      moduleNames[Module::myNewModule];
      moduleSendPayloadLength[Module::myNewModule];
      moduleRecvPayloadLength[Module::myNewModule];

- create a TF kernel implementation MyNewOp inherited from AsyncOpKernel, inside these files: c++/src/myNewOp.cpp and c++/include/myNewOp.hpp

  define the constructor and override the ComputeAsync method:

      class MyNewOp : public AsyncOpKernel {
        public:
          explicit MyNewOp(OpKernelConstruction* context);
          void ComputeAsync(OpKernelContext* context, DoneCallback done) override;
      };

  using your FPGA module

      auto worker = connectionManager.createWorker(Module::myNewModule, count);

- register the kernel with a custom operator:

  c++/src/entrypoint.cpp

      REGISTER_OP("MyNewOp")
          .Input("input: float")
          .Output("output: float")
          .SetShapeFn([](InferenceContext* c) {
            c->set_output(0, c->input(0));
            return Status::OK();
          });

      // the last argument, MyNewOp, is the custom kernel class
      REGISTER_KERNEL_BUILDER(Name("MyNewOp").Device(DEVICE_CPU), MyNewOp);

  c++/include/entrypoint.hpp

      #include "myNewOp.hpp"

  More information on creating custom TF kernels can be found in the TensorFlow guide "Create an op".

- compile everything

      cd ./c++
      make clean
      make

- append a test for your operator in tests/op_test.py

      def testMyNewOp(self):
          with self.session():
              input = [1,2,3]
              result = load_op.op_lib.MyNewOp(input=input)
              self.assertAllEqual(result, input)

- add a custom layer that uses the operator in hostLib/layers/myNewLayer.py

      class MyNewLayer(layers.Layer):
          ...
          def call(self, inputs):
              return load_op.op_lib.MyNewOp(input=inputs)

- add that layer to the python module in hostLib/layers/__init__.py

      __all__ = ["conv2d", "myNewLayer"]
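The idea behind the module list, one definition per module that feeds several lookup tables, can be sketched in Python. This is only an analogy for what the MOD_DEF step is described as doing; the macro's real arguments are not shown in this README, and every module name, id and payload length below is hypothetical.

```python
# Hypothetical module table: every name, id and length below is made up for illustration.
# Each row is (name, id, send payload length, receive payload length).
MODULES = [
    ("dummyModule", 0xF0, 4, 4),
    ("myNewModule", 0xF1, 9, 1),
]

# Derive all four lookup tables from the single definition so they cannot drift apart.
module_ids = {name: mod_id for name, mod_id, _, _ in MODULES}
module_names = {mod_id: name for name, mod_id, _, _ in MODULES}
module_send_payload_length = {name: send for name, _, send, _ in MODULES}
module_recv_payload_length = {name: recv for name, _, _, recv in MODULES}

# Duplicate names or ids would silently overwrite entries above, so check for them.
assert len(module_ids) == len(MODULES), "duplicate module name"
assert len(module_names) == len(MODULES), "duplicate module id"

print(module_ids["myNewModule"], module_send_payload_length["myNewModule"])
```

Adding a module then means adding one row, which is the property the C++ macro approach is after: no table can be forgotten when a new module is introduced.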

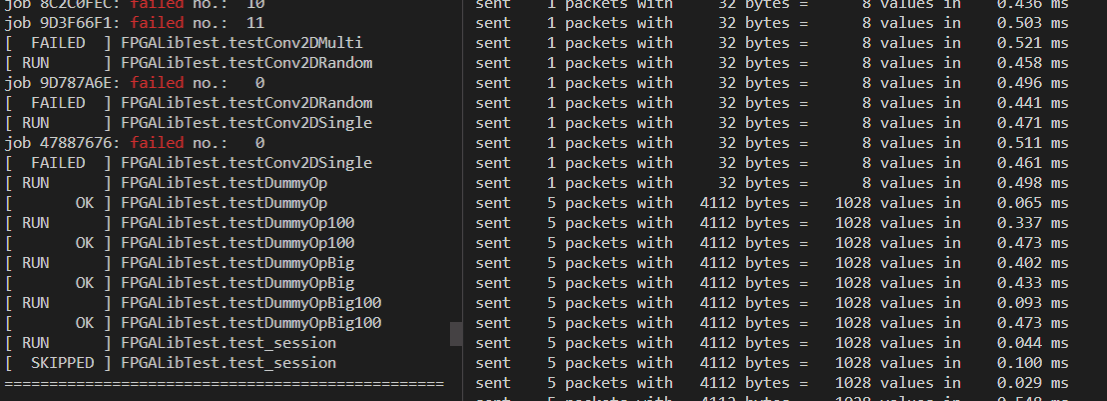

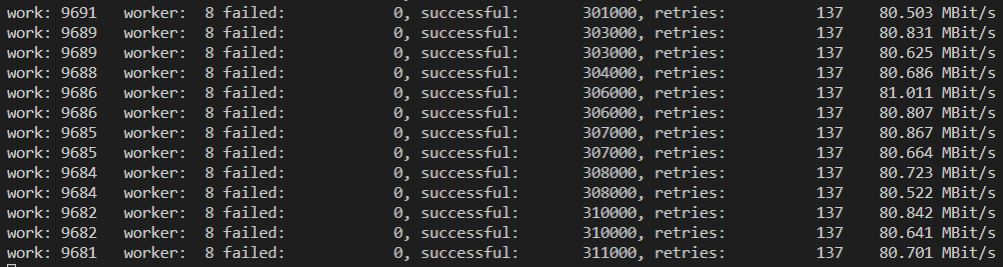

Tests

There are tests for each complexity level of this project.

- loopback test without connected FPGAs. This will only succeed for modules that have equal input and output lengths.

  compile the UDP echo server and run it in a separate terminal:

      cd ./c++
      make echo
      ./build/echo

  edit config.json:

      {"fpgas": [
        { "ip": "localhost", "port": 1234 }
      ]}

  then run any dummy module test:

      python3 tests/op_test.py

- FPGA communication test in c++/tests/main.cpp

      cd ./c++
      make test
      ./build/test

- operator validation test, based on TF's test suite, in tests/op_test.py

      python3 tests/op_test.py
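The loopback restriction is easier to see with a small stand-in for the echo server. The sketch below is not the repository's C++ echo program; it assumes that server simply returns each UDP datagram unchanged, which the loopback description implies but does not spell out. It binds to an ephemeral port instead of 1234 so it does not clash with a running echo server.

```python
import socket
import threading


def serve_echo(sock, stop):
    """Send every received datagram back to its sender until `stop` is set."""
    sock.settimeout(0.1)  # wake up regularly to check the stop flag
    while not stop.is_set():
        try:
            data, addr = sock.recvfrom(65535)
        except socket.timeout:
            continue
        sock.sendto(data, addr)


# Port 0 lets the OS pick a free port; the README's config uses 1234.
server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
server.bind(("127.0.0.1", 0))
stop = threading.Event()
thread = threading.Thread(target=serve_echo, args=(server, stop), daemon=True)
thread.start()

# Act as the host side: send a payload and wait for the reply.
client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
client.settimeout(2.0)
payload = bytes(range(16))
client.sendto(payload, server.getsockname())
reply, _ = client.recvfrom(65535)

# Shut everything down cleanly.
stop.set()
thread.join()
client.close()
server.close()

print(len(payload), len(reply))
```

An echo always answers with exactly as many bytes as it received, so a module whose expected receive length differs from its send length can never get a valid response from it. That is why only modules with equal input and output lengths pass the loopback test.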

Dependencies

C++

- libstd

- libtensorflow_framework

- https://github.com/nlohmann/json
  - ./config.json

Python3

- tensorflow
  - c++/build/op_lib.so

Used in examples:

- Pillow

- CV2

- mss

- numpy

- IPython